Speed up your application¶

The following sections will give you some advice about optimizing rendering times in your stories.

Designing a fast Toucan Toco application is an incremental process: it requires patience and a willingness to adjust your approach as you go.

Choose your data architecture: live data vs. loaded data¶

Live data¶

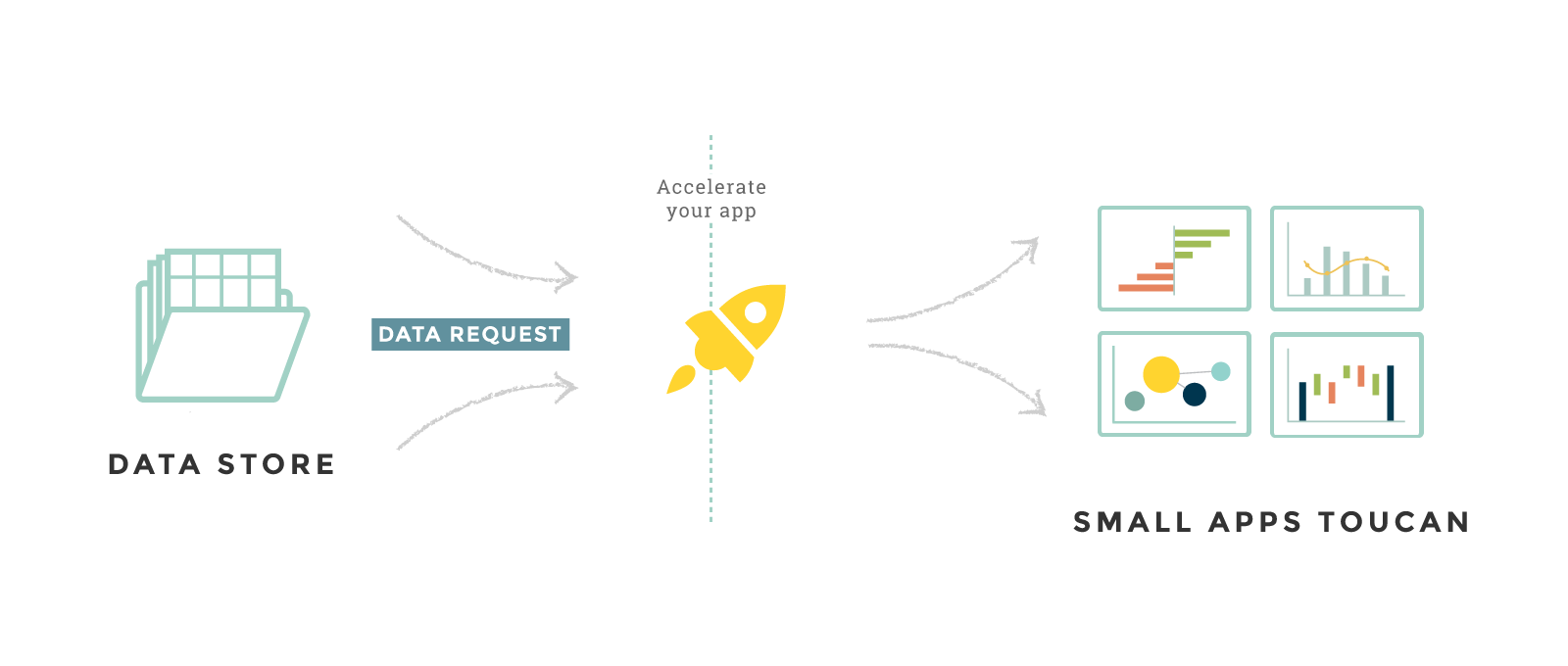

Live data allows you to display data directly from your data infrastructure without having your data stored in MongoDB collections in Toucan.

Each time you navigate to a Toucan Toco story, a data request will be forwarded to your data backend ensuring that the data displayed is as fresh as it is in your storage.

In that case, however, keep in mind that your backend response time must be as short as possible and the tables you query must remain small.

Here are a few tips:

- Your data has to be prepared before being displayed in Toucan apps.

- Post-processes can be used but should be avoided, because Toucan runs them in its own in-memory process: every second counts when it comes to rendering.

- Multiple queries should be avoided at all costs, as they slow down live data screens. Prepare your data instead.

Furthermore, to use live data, you have to make sure that your data infrastructure is meant to serve data over the internet to a user:

- Your data backend is reliable and fast. It is ready to respond to our live queries at any time, and it responds within the time frame expected of a web application (sub-second latencies, so that the end user does not see an empty screen).

- Your database or information system can be accessed over encrypted connections (TLS) to make sure data will travel encrypted over the internet.

See the documentation.

Loaded data (MongoDB)¶

If you do not want to or cannot use live data, Toucan can store the data you provide, within a limit of 10 GB.

Toucan Toco uses MongoDB technology to store data.

- Each domain (=dataset that can be used within Toucan) created is a MongoDB collection.

- Each line of your dataset is a MongoDB document.

- Each column of your dataset is an attribute in MongoDB.

A Toucan Toco application with 50 stored data sources therefore has 50 MongoDB collections.

To make sure the stored data is displayed as quickly as possible, follow these 2 simple rules:

Groups: avoid duplicates¶

The first good practice is to make sure the data queried on your screens contains only the values that will be displayed.

Are you creating a dataset to feed a requester on your screen?

=> Make sure you group your dataset on the column you will be using. If your requester should display only 5 different values, your dataset should contain no more than 5 lines.

Let’s imagine you want to display world regions in your requester: group on your “REGION” column, for example.

Create as many groups as required.
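
As a rough illustration of what grouping achieves, here is a minimal Python sketch. The rows, the column names and the `group_on` helper are hypothetical and are not part of Toucan; in a real app the grouping is done in your data preparation.

```python
# Hypothetical rows standing in for a dataset (one dict per line).
rows = [
    {"REGION": "Europe", "COUNTRY": "France", "SALES": 120},
    {"REGION": "Europe", "COUNTRY": "Spain", "SALES": 80},
    {"REGION": "Asia", "COUNTRY": "Japan", "SALES": 200},
    {"REGION": "Asia", "COUNTRY": "India", "SALES": 150},
    {"REGION": "Africa", "COUNTRY": "Kenya", "SALES": 60},
]


def group_on(rows, column):
    """Return one line per distinct value of `column`, in first-seen order."""
    groups = {}
    for row in rows:
        # Keep only the grouped column: the requester needs nothing else.
        groups.setdefault(row[column], {column: row[column]})
    return list(groups.values())


requester_dataset = group_on(rows, "REGION")
print(len(rows), "lines before grouping,", len(requester_dataset), "after")
```

The requester dataset ends up with one line per region instead of one line per country, which is all the requester needs to display.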

You’re building your app: Which elements should you keep in mind?¶

Limit the size of your datasets with filters¶

Filters allow you to display only specific lines of your dataset.

Important

When you’re building your story, it’s important to start your query pipeline with all the filtering operations.

If you’re using the result of your date requester or report requester, or if you need to apply any condition that filters your dataset (reduces the number of rows), start your YouPrep™ pipeline with this operation. All the following YouPrep™ steps will then be applied to less data, and your story will perform better 🚀.
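
To see why the order matters, here is a small Python sketch that is independent of YouPrep™. The dataset, the `add_margin` step and the year condition are made up for the example; the point is only to count how many rows the costly step has to process in each order.

```python
def filter_rows(rows, predicate):
    """Keep only the rows for which `predicate` is true."""
    return [row for row in rows if predicate(row)]


def add_margin(rows):
    """Stand-in for a costly step; also reports how many rows it processed."""
    computed = [{**row, "margin": row["revenue"] - row["cost"]} for row in rows]
    return computed, len(rows)


def is_2023(row):
    return row["year"] == 2023


# Hypothetical dataset: 1000 rows spread evenly over 4 years.
rows = [{"year": 2020 + i % 4, "revenue": 100 + i, "cost": 60} for i in range(1000)]

# Filter last: the costly step runs on every row.
computed_all, processed_late = add_margin(rows)
result_late = filter_rows(computed_all, is_2023)

# Filter first: the costly step runs only on the rows that will be kept.
result_early, processed_early = add_margin(filter_rows(rows, is_2023))

print(processed_late, processed_early)
```

Both orders produce the same rows, but filtering first means the costly step handles a quarter of the data in this example.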

Limit the size of your datasets with Requesters¶

Do you still have thousands of rows in your final datasets while using filters on your story? You can replace your filters with requesters.

Important

Requesters allow your web browser to only retrieve the lines of the dataset that will be displayed.

How? The query of the main dataset will be filtered with the value of the requester: the data will arrive filtered on the screen. It results in a lighter dataset and faster rendering.

You have to order your requesters to make them more efficient.

See the documentation on filter VS requesters.
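
The difference can be sketched in a few lines of Python. This is a simplified model and not Toucan's implementation: it assumes a filter narrows the rows after they have been retrieved, while a requester value is part of the query. The `fetch` function and the dataset are hypothetical.

```python
# Hypothetical stored dataset: 3 regions x 12 months.
DATASET = [
    {"region": region, "month": month, "sales": 10 * month}
    for region in ("Europe", "Asia", "Africa")
    for month in range(1, 13)
]


def fetch(query=None):
    """Stand-in for the data query: return the rows matching `query`."""
    query = query or {}
    return [
        row for row in DATASET
        if all(row[field] == value for field, value in query.items())
    ]


# Filter: the whole dataset is retrieved, then narrowed down afterwards.
retrieved_with_filter = fetch()
shown_with_filter = [r for r in retrieved_with_filter if r["region"] == "Asia"]

# Requester: the selected value is part of the query itself.
retrieved_with_requester = fetch({"region": "Asia"})

print(len(retrieved_with_filter), len(retrieved_with_requester))
```

The same rows are displayed in both cases, but the requester version retrieves a third of the rows in this example.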

Use YouPrep™ as dataprep as much as possible¶

When you’re working with your datasources, you may have to repeat the same operations on your data in different tiles/stories.

Save time and use YouPrep™ as dataprep! Only do things once.

Read the following documentation if you need any further information.

Important

Preparing your data will drastically increase performance.

YouPrep™ as dataprep gives you the opportunity to prepare an operation before using it in your story. It’s the perfect tool for all time-consuming operations (such as joins, appends, computing evolutions…).

Your app is lagging: Let’s add some Mongo indexes¶

You should use MongoDB indexes to speed up your data queries and thus, your rendering.

What are MongoDB indexes?

Important

We can compare your datasets to a library. If the books are not ordered, finding the one you’re looking for can be really painful. But if your library is ordered by category, color or author, it becomes child’s play.


Without indexes, MongoDB has to do a collection scan, i.e. scan every document of your collection to see if it matches the query statement. For a small collection, you won’t see any difference in terms of rendering, but the difference will be huge for bigger collections (a few thousand lines, for example). Indexes can accelerate your queries by limiting the number of documents (=lines) to scan.

Indexes can be created on any attribute of a document: they allow MongoDB to know where to find data that will match your query statement quicker.

See the official doc for more information.
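
The effect of an index on the number of documents examined can be illustrated with a toy Python model. It is a deliberate simplification: MongoDB indexes are B-tree structures, whereas this sketch uses a plain dictionary, and the collection and field names are invented.

```python
from collections import defaultdict

# Hypothetical collection: 10,000 documents spread over 100 cities.
documents = [{"_id": i, "city": f"city_{i % 100}", "value": i} for i in range(10_000)]


def collection_scan(docs, field, value):
    """Examine every document and keep the ones that match."""
    examined = 0
    matches = []
    for doc in docs:
        examined += 1
        if doc[field] == value:
            matches.append(doc)
    return matches, examined


def build_index(docs, field):
    """Map each value of `field` to the positions of the matching documents."""
    index = defaultdict(list)
    for position, doc in enumerate(docs):
        index[doc[field]].append(position)
    return index


def index_lookup(docs, index, value):
    """Fetch only the documents the index points to."""
    positions = index.get(value, [])
    return [docs[p] for p in positions], len(positions)


scan_matches, scan_examined = collection_scan(documents, "city", "city_42")
city_index = build_index(documents, "city")
index_matches, index_examined = index_lookup(documents, city_index, "city_42")

print(scan_examined, index_examined)
```

Both approaches return the same 100 documents, but the scan examines all 10,000 while the lookup touches only the matching ones. Building the index has a cost of its own, paid once up front.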

MongoDB indexes are described in the etl_config file. You can create indexes for each domain. Each domain is a key in the MONGO_INDEXES configuration block.

    MONGO_INDEXES:
      domain_a: [
        'year'
        ['city', 'kpi_code', 'version']
        ['city', 'entity', 'version']
      ]
      domain_b: [
        ['date', 'filter']
      ]

The domain domain_a has 3 indexes:

- the 1st is an index on a single field, `year`

- the 2nd and the 3rd are compound indexes.
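
One way to read the configuration above is sketched below in Python. The dictionary mirrors the example block, and the `describe_indexes` helper is hypothetical: it only illustrates that a bare field name stands for a single-field index and a list of names for a compound index. It is not how Toucan parses the file.

```python
# The example configuration above, rewritten as a Python dictionary.
MONGO_INDEXES = {
    "domain_a": [
        "year",
        ["city", "kpi_code", "version"],
        ["city", "entity", "version"],
    ],
    "domain_b": [
        ["date", "filter"],
    ],
}


def describe_indexes(config):
    """Classify each entry as a single-field or a compound index."""
    described = {}
    for domain, entries in config.items():
        described[domain] = []
        for entry in entries:
            # A bare string is shorthand for an index on that one field.
            fields = [entry] if isinstance(entry, str) else list(entry)
            kind = "single-field" if len(fields) == 1 else "compound"
            described[domain].append((kind, fields))
    return described


described = describe_indexes(MONGO_INDEXES)
for domain, indexes in described.items():
    for kind, fields in indexes:
        print(domain, kind, fields)
```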

Important

The best way to have efficient Mongo indexes is to analyse your query structure. If you always filter your datasets on the same columns, adding a Mongo index on those columns is a good idea.

For compound indexes, the order of the fields is important in the index but not in the query. In addition to supporting queries that match on all the index fields, compound indexes can support queries that match on the prefix (a subset at the beginning of the set) of the index fields.

- Success with the index `['city', 'kpi_code', 'version']`:

      query:
        kpi_code: "CA"
        city: "Paris"
        version: 7

- Success with the index `['city', 'kpi_code', 'version']`:

      query:
        city: "Paris"
        kpi_code: "CA"

- Success with the index `['city', 'kpi_code', 'version']`, but only for `city`:

      query:
        city: "Paris"
        version: 7

- Failure with the index `['city', 'kpi_code', 'version']` (`city` is missing):

      query:
        version: 7
        kpi_code: "CA"
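
The prefix rule behind these four cases can be written as a short Python function. This is a simplified model that only considers equality matches, and `usable_prefix` is a hypothetical helper, not a MongoDB or Toucan function.

```python
def usable_prefix(index_fields, query_fields):
    """Return the leading fields of the index that the query can use.

    Fields are taken in index order until one is missing from the query.
    The order of the fields inside the query does not matter.
    """
    prefix = []
    for field in index_fields:
        if field not in query_fields:
            break
        prefix.append(field)
    return prefix


index = ["city", "kpi_code", "version"]

full_match = usable_prefix(index, {"kpi_code", "city", "version"})
two_fields = usable_prefix(index, {"city", "kpi_code"})
city_only = usable_prefix(index, {"city", "version"})
no_match = usable_prefix(index, {"version", "kpi_code"})

for result in (full_match, two_fields, city_only, no_match):
    print(result)
```

An empty result means the query cannot use the index at all, which corresponds to the failure case above.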

Warning

Creating indexes everywhere for everything is not a magical solution: building and maintaining them consumes time and memory.

Our Data Request Sandbox displays performance reports for your queries, with information such as the usage and relevance of your indexes.

Tadaaa!