Advanced: update with confidence

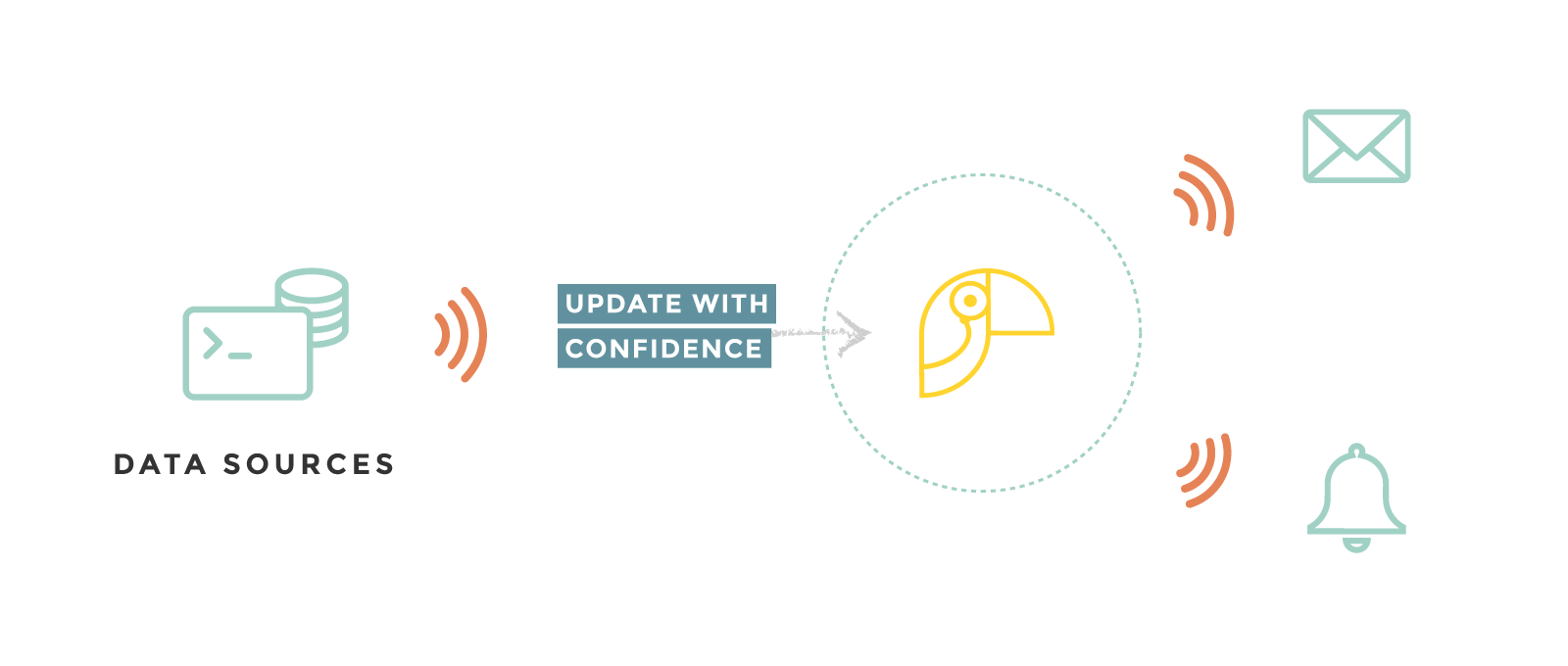

Automate your operations with Toucan Operations API

Updating your Toucan Toco smallapps’ data can be fully automated thanks to our API:

- you can automatically update your data sources

- you can automatically launch a data preprocess job

Upload your updated data source files

To upload a data source file, run the following command:

curl -X POST \
  --user "${LOGIN}:${PASSWORD}" \
  -F data="{\"filename\": \"${FILE_NAME}\"}" \
  -F "file=@${FILE_NAME_LOCAL_PATH}" \
  -F "dzuuid=<RANDOM STRING>;type=text/plain" \
  -F "dztotalfilesize=<NUMBER OF BYTES IN THE FILE>;type=text/plain" \
  -F "dztotalchunkcount=1;type=text/plain" \
  -F "dzchunkbyteoffset=0;type=text/plain" \
  -F "dzchunkindex=0;type=text/plain" \
  "https://${API_URL}/${SMALL_APP}/data/sources?validation=false"

Where:

- $LOGIN and $PASSWORD are the credentials of a user with “contributor” rights on the small app

- $FILE_NAME is the name of the data source as defined in the smallapp configuration

- $FILE_NAME_LOCAL_PATH is the path to your file on the computer/server where the curl command is launched

- $API_URL is the URL of your Toucan Toco backend

- $SMALL_APP is the name of the smallapp you want to update
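If you drive the upload from a script rather than from the shell, the text form fields of the command can be prepared programmatically. The sketch below is a minimal illustration, not an official client: `build_upload_fields` is a hypothetical helper name, and it only assembles the fields of a single-chunk upload as they appear in the curl command. The file itself would still be attached by whichever HTTP client you use.

```python
import json
import uuid


def build_upload_fields(data_source_name, file_size):
    """Return the text form fields of a single-chunk upload.

    Hypothetical helper: the field names mirror the curl command,
    nothing here is taken from a Toucan Toco library.
    """
    return {
        # JSON payload naming the data source, as in the curl `data` field
        "data": json.dumps({"filename": data_source_name}),
        # Any random string identifying this upload
        "dzuuid": uuid.uuid4().hex,
        # Total number of bytes in the file
        "dztotalfilesize": str(file_size),
        # The whole file is sent as one chunk starting at offset 0
        "dztotalchunkcount": "1",
        "dzchunkbyteoffset": "0",
        "dzchunkindex": "0",
    }
```

The `file_size` argument would typically come from `os.path.getsize()` on the local file.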

Tutorial : Product Corporation

Let’s say we want to upload all files in the directory my_datasources:

LOGIN="<LOGIN>"
PASSWORD="<PASSWORD>"
API_URL="<API_URL>"
SMALL_APP="<SMALL_APP>"

for FILE_NAME_LOCAL_PATH in my_datasources/*; do
  # The data source name is the file name without its directory
  FILE_NAME=$(basename "${FILE_NAME_LOCAL_PATH}")
  curl -X POST \
    --user "${LOGIN}:${PASSWORD}" \
    -F data="{\"filename\": \"${FILE_NAME}\"}" \
    -F "file=@${FILE_NAME_LOCAL_PATH}" \
    "https://${API_URL}/${SMALL_APP}/data/sources?validation=false"
  [[ $? -ne 0 ]] && echo "Problem when uploading ${FILE_NAME_LOCAL_PATH}"
done
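The same loop can be expressed in Python when you want to collect the failures instead of only printing them. This is a sketch under assumptions: `upload_all` and the `upload` callable are hypothetical names, and `upload` stands for whatever function actually sends one file and returns `True` on success.

```python
from pathlib import PurePath


def upload_all(paths, upload):
    """Call upload(file_name, path) for each path and return the failed paths.

    `upload` is a hypothetical callable supplied by the caller; it should
    return True when the file was uploaded successfully.
    """
    failed = []
    for path in paths:
        # The data source name is the file name without its directory
        file_name = PurePath(path).name
        if not upload(file_name, path):
            failed.append(path)
    return failed
```

For example, `upload_all(glob.glob("my_datasources/*"), send_file)` would return the list of files to retry, where `send_file` is your own upload function.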

Launch Populate and Release Job

Once you have updated all your data sources, you may want to trigger a populate job and release your data. You can do so with the following command:

curl -X POST \
  --user "${LOGIN}:${PASSWORD}" \
  -H 'Content-Type: application/json' \
  --data-binary '{"origin": "autofeed", "operations": ["preprocess_data_sources", "populate_basemaps", "populate_reports", "populate_dashboards", "release"], "async": true}' \
  "https://${API_URL}/${SMALL_APP}/operations?stage=staging&notify=false"

Where:

- $LOGIN and $PASSWORD are the credentials of a user with the right to update the data sources

- $API_URL is the URL of your Toucan Toco stack

- $SMALL_APP is the name of the smallapp you want to update
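To make the structure of this request easier to see, here is a minimal Python sketch that builds the URL and the JSON body of the operations call without sending it. `build_operations_request` is a hypothetical helper, and the default values simply repeat those of the curl command; actually sending the request, with authentication, is left to your HTTP client.

```python
import json
from urllib.parse import urlencode

# Operations listed in the curl command, in the same order
OPERATIONS = [
    "preprocess_data_sources",
    "populate_basemaps",
    "populate_reports",
    "populate_dashboards",
    "release",
]


def build_operations_request(api_url, small_app, stage="staging", notify=False):
    """Return the (url, body) pair for the operations call (hypothetical helper)."""
    # The query string carries the stage and the notify flag as lowercase text
    query = urlencode({"stage": stage, "notify": str(notify).lower()})
    url = f"https://{api_url}/{small_app}/operations?{query}"
    body = json.dumps(
        {"origin": "autofeed", "operations": OPERATIONS, "async": True}
    )
    return url, body
```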

Launch a partial data preprocess job

You can populate and release only part of your data, selected:

- by input domains: preprocess all the output domains that contain any of the listed input domains;

- by function names: preprocess all the output domains that have this function as entry point;

- by output domains.

All these entries are optional. If they are combined, the result is the union of all the domains returned, not their intersection.
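The union rule can be illustrated with a small Python model. Everything in it is hypothetical: the `PIPELINE` dictionary, its domain and function names, and the `select_output_domains` helper are made up for the illustration and do not reflect how the backend is implemented.

```python
# Hypothetical pipeline: each output domain lists the input domains it
# reads and the function that produces it. All names are invented.
PIPELINE = {
    "sales_by_region": {"inputs": {"sales", "referential"}, "function": "compute_sales"},
    "stock_levels": {"inputs": {"stock"}, "function": "compute_stock"},
    "headcount": {"inputs": {"hr", "referential"}, "function": "compute_headcount"},
}


def select_output_domains(input_domains=(), function_names=(), output_domains=()):
    """Return the union of the output domains matched by each criterion."""
    selected = set()
    for name, spec in PIPELINE.items():
        # Matched through at least one of the listed input domains
        if spec["inputs"] & set(input_domains):
            selected.add(name)
        # Matched through its entry-point function
        if spec["function"] in function_names:
            selected.add(name)
    # Output domains listed explicitly are added as-is
    selected.update(d for d in output_domains if d in PIPELINE)
    return selected
```

In this model, combining `input_domains=["stock"]` with `function_names=["compute_headcount"]` selects both `stock_levels` and `headcount`, since each criterion adds its own matches.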

The following examples start the operations for all output domains that take the input domain named referential.

Preprocess

curl -X POST "https://${API_URL}/${SMALL_APP}/data/preprocess?stage=staging" --user "${LOGIN}:${PASSWORD}" -H 'Content-Type: application/json' -d '{"input_domains": ["referential"], "async": true}'

Populate

curl -X POST "https://${API_URL}/${SMALL_APP}/populate?stage=staging" --user "${LOGIN}:${PASSWORD}" -H 'Content-Type: application/json' -d '{"input_domains": ["referential"], "async": true}'

Populate and Release

curl -X POST "https://${API_URL}/${SMALL_APP}/populate?stage=staging&release&notify=false" --user "${LOGIN}:${PASSWORD}" -H 'Content-Type: application/json' -d '{"input_domains": ["referential"], "async": true}'

Where:

- $LOGIN and $PASSWORD are the credentials of a user with the right to update the data sources

- $API_URL is the URL of your Toucan Toco stack

- $SMALL_APP is the name of the smallapp you want to update
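The three commands differ only in their endpoint and query string, which the following Python sketch makes explicit. `build_partial_request` is a hypothetical helper that builds the URL and JSON body without sending anything; it assumes, as in the commands, that `release` is a value-less flag that only applies to the populate endpoint.

```python
import json

# Endpoint paths as they appear in the curl commands
ENDPOINTS = {"preprocess": "data/preprocess", "populate": "populate"}


def build_partial_request(api_url, small_app, action, input_domains,
                          stage="staging", release=False):
    """Return (url, body) for a partial preprocess or populate call."""
    if release and action != "populate":
        raise ValueError("release is only shown with the populate endpoint")
    query = f"stage={stage}"
    if release:
        # `release` has no value; notifications are disabled as in the command
        query += "&release&notify=false"
    url = f"https://{api_url}/{small_app}/{ENDPOINTS[action]}?{query}"
    body = json.dumps({"input_domains": list(input_domains), "async": True})
    return url, body
```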

Python API client

Monitoring data operations with notifications

Once your data updates are fully automated, you should monitor that everything runs smoothly. You can do this with Toucan Toco’s built-in notification system.

The relevant event names for configuring these notifications are:

- smallapp.after_operation_success

- smallapp.after_operation_failure

- smallapp.from_augment (this last one can be emitted inside a preprocess function).

Tutorial : Product Corporation

This example configuration sends an email to the person at Product Corporation who started the operation whenever a “release” operation fails.

etl_config.cson:

NOTIFICATIONS: [{
  event: 'smallapp.after_operation_failure'
  handler: 'failed_release'
  sender: [{
    channel: 'email'
    template: 'operation_failure'
  }]
}]

We are assuming you have defined a template called ‘operation_failure’.

notification_handlers.py:

import datetime
import calendar

from app.signal_manager.builtin_handlers import DO_NOT_FILTER


def failed_release(operation, **kwargs):
    # Only react to failures of the "release" operation
    if operation != "release":
        return None
    return DO_NOT_FILTER
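The handler's logic can be checked locally before deploying it. The sketch below is only an illustration: since `app.signal_manager.builtin_handlers` is importable only inside a Toucan Toco backend, it replaces `DO_NOT_FILTER` with a stand-in sentinel whose real value is not known here, and it redefines the handler with the same logic.

```python
# Stand-in for app.signal_manager.builtin_handlers.DO_NOT_FILTER, which is
# only available inside the backend. This sentinel is an assumption.
DO_NOT_FILTER = object()


def failed_release(operation, **kwargs):
    # Same logic as the handler in notification_handlers.py
    if operation != "release":
        return None
    return DO_NOT_FILTER


# A failed "release" operation returns the sentinel
assert failed_release("release") is DO_NOT_FILTER
# Any other operation returns None
assert failed_release("populate_reports") is None
# Extra keyword arguments are accepted and ignored
assert failed_release("release", user="someone@example.com") is DO_NOT_FILTER
```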